First SMT release of 2023

by Jiri Dolezalek on 10/01/2023

First SMT release of 2023 has been made available

Sometimes it is nice to have a tool or report that shows how much your backup storage has degraded over time, especially through fragmentation and auto-growth/shrink operations. However, this usually costs administrators a lot of time: setting up baseline monitoring, collecting the data and then analyzing it. I found one useful way to save that time.

This approach has some prerequisites. I assume you are not cleaning up your backup history tables (as usual :) ) and that you back up your databases to storage that also holds some database files. So it is mainly useful in smaller environments with smaller storage, where data files share the same DAS or a centralized SAN with a shared RAID group.

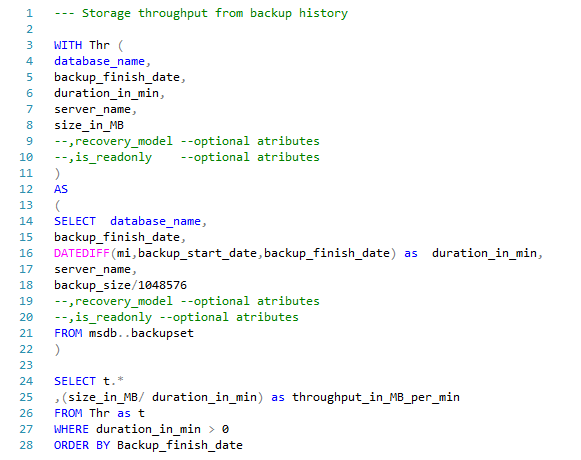

Then you can use my script to extract that information: StorageThr.sql

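StorageThr.sql itself is not reproduced in this post, so the following is only a minimal Python sketch of the underlying arithmetic, not the script's actual logic. It assumes backup history rows shaped like SQL Server's msdb.dbo.backupset table, where backup_size is in bytes and backup_start_date/backup_finish_date bound each backup. The BackupRow class, the function name and the sample values are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass
class BackupRow:
    """One backup history row; field names mirror msdb.dbo.backupset (assumption)."""
    database_name: str
    backup_start_date: datetime
    backup_finish_date: datetime
    backup_size: int  # bytes written, as stored in backupset.backup_size


def throughput_in_mb_per_min(row: BackupRow) -> Optional[float]:
    """Return MB written per minute of backup duration, or None for zero-length runs."""
    seconds = (row.backup_finish_date - row.backup_start_date).total_seconds()
    if seconds <= 0:
        # Tiny backups can start and finish within the same second; skip them
        return None
    size_mb = row.backup_size / (1024 * 1024)
    return size_mb / (seconds / 60)


# Hypothetical rows: the same 6 GB database backed up months apart
rows = [
    BackupRow("SalesDB", datetime(2023, 1, 2, 22, 0), datetime(2023, 1, 2, 22, 10), 6 * 1024**3),
    BackupRow("SalesDB", datetime(2023, 6, 2, 22, 0), datetime(2023, 6, 2, 22, 16), 6 * 1024**3),
]
for r in rows:
    print(r.backup_start_date.date(), round(throughput_in_mb_per_min(r), 1))
```

Dividing by the measured duration rather than counting backups is what makes the value comparable across runs: if the same amount of data takes longer to write, throughput drops.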

As a result, you get a list with a throughput_in_MB_per_min value for every backup taken. This can then be exported to reports or graphs like this one:
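The post does not say how the per-backup values are aggregated for a graph, so here is one possible approach as a minimal sketch: group hypothetical (backup date, MB per minute) pairs by calendar month and take the median, so that a steadily falling line hints at degrading storage. The function name and sample values are illustrative assumptions.

```python
from collections import defaultdict
from datetime import date
from statistics import median


def monthly_median_throughput(samples):
    """Group (backup_date, mb_per_min) pairs by calendar month and return the median per month.

    A median is used instead of a mean so that one unusually slow or fast
    backup (e.g. one overlapping a heavy batch job) does not dominate the month.
    """
    buckets = defaultdict(list)
    for backup_date, mb_per_min in samples:
        if mb_per_min is not None:  # skip zero-duration backups
            buckets[(backup_date.year, backup_date.month)].append(mb_per_min)
    # Sort by (year, month) so the result is ready to plot in time order
    return {month: median(values) for month, values in sorted(buckets.items())}


# Hypothetical values for illustration only
samples = [
    (date(2023, 1, 2), 614.4),
    (date(2023, 1, 9), 598.0),
    (date(2023, 1, 16), 620.0),
    (date(2023, 6, 5), 384.0),
    (date(2023, 6, 12), 410.0),
]
for (year, month), value in monthly_median_throughput(samples).items():
    print(f"{year}-{month:02d}: {value:.1f} MB/min")
```

In a real environment you would probably also group by database, since backups of very different sizes can show different throughput even on healthy storage.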

Michal Tinthofer is the face of the Woodler company, which, like him, is fully committed to complete support of Microsoft SQL Server products for its customers. He often acts as a database architect, performance tuner, administrator, SQL Server monitoring developer (Woodler SMT) and, last but not least, a trainer of people who are developing their skills in this area. His current "Quest" is to help admins and developers quickly and accurately identify issues related to their work and the SQL Server runtime.


Anyway, this post focuses on some different, distressing news about SQL 2012. If you have current Software Assurance (SA) for SQL Server 2008 R2, this allows you to slide into SQL Server 2012 while maintaining CAL licensing (by the way this is not pos

Last week, we had the incredible opportunity to sponsor SQL Day 2025 in Wrocław, Poland - one of the biggest data community conferences in Central Europe.
